When I was a new programmer, there was one essential under-the-hood process that perplexed me for some time: how programs get executed.

Specifically, I wondered, "How does a word in English translate into something a computer understands?" I would type in a command in human-readable language (e.g. "print()"), and somehow it would run. However, I knew that computers really only understand machine language, which consists only of binary numbers (0s and 1s).

It turns out that the key player working behind the scenes here is the compiler (or interpreter, depending on the language). Once you learn how the compiler works in tandem with other key components, you'll better understand how your code becomes a fully functional program. And with that will come a deeper appreciation for the technologies you'll be using on a daily basis.

Let’s dive in!

The role of the compiler in program execution

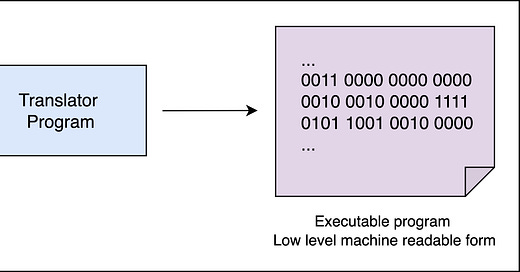

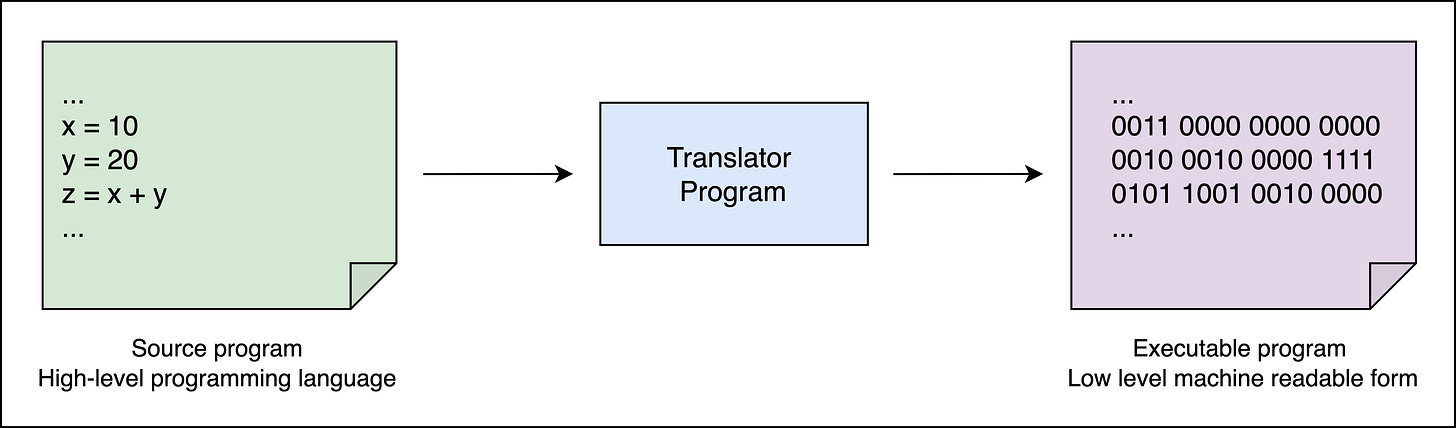

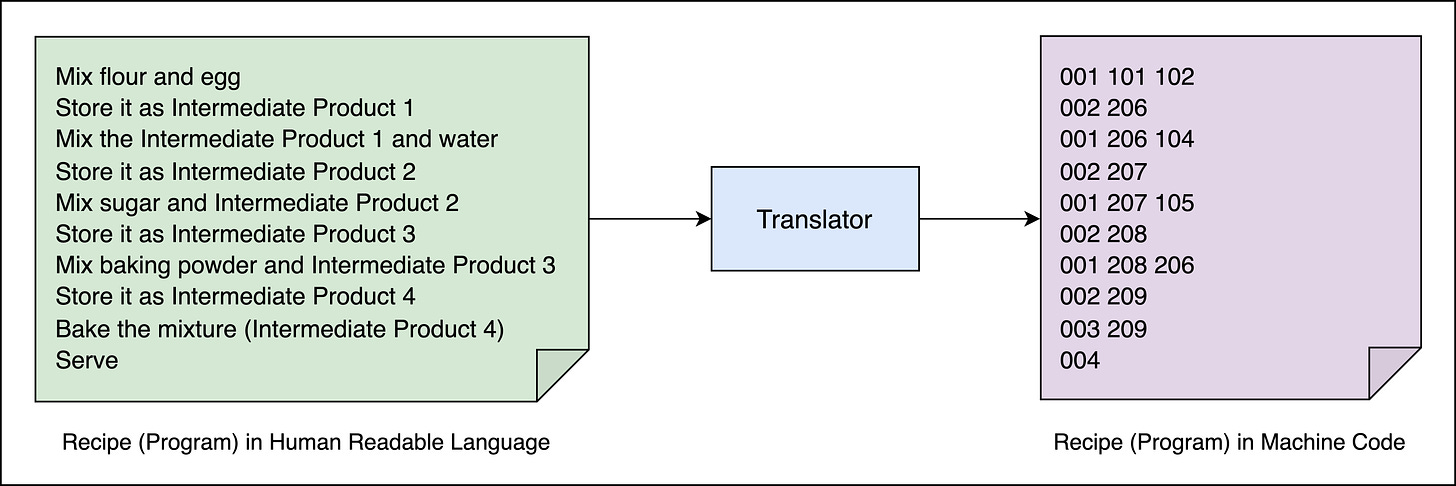

In simplest terms, we can think of the compiler as a translator.

The compiler converts code written in a high-level programming language (human-readable code) into binary numbers (machine-readable code), which form a set of instructions the computer can understand.

This set of instructions is then executed by the processor (e.g. an Intel chip or Apple's M4), which we can think of as the "brain" of the computer that does all the thinking and math.

Nearly every programmer today uses one of countless high-level programming languages (Python and Java are two popular examples). These languages allow us to write more human-readable code and distance ourselves from the low-level, machine-readable code that consists of binary numbers. For humans, writing in binary is both cumbersome and error-prone (and quite frankly, it's not for everybody).

To aid its translation, the compiler relies on a fixed mapping between named operations and their binary counterparts. The set of operations a given processor understands is called its instruction set. Each processor family has its own distinct machine language, and your compiler will translate your code into the machine code of the target hardware using that hardware's instruction set.
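To make the idea of an instruction set concrete, here is a minimal sketch of a translator for an imaginary processor. The four operation names, their 4-bit codes, and the `assemble` helper are all invented for illustration; real instruction sets are far larger and are defined by the hardware vendor.

```python
# Hypothetical 4-bit operation codes for an imaginary processor.
# These values are assumptions for illustration, not a real instruction set.
OPCODES = {
    "LOAD": 0b0001,
    "ADD": 0b0010,
    "STORE": 0b0011,
    "HALT": 0b1111,
}


def assemble(line: str) -> str:
    """Translate one instruction such as 'ADD 5' into an 8-bit binary string."""
    parts = line.split()
    mnemonic = parts[0]
    # Instructions without an operand (like HALT) get a zero operand.
    operand = int(parts[1]) if len(parts) > 1 else 0
    if not 0 <= operand < 16:
        raise ValueError(f"operand {operand} does not fit in 4 bits")
    # First 4 bits: the operation code. Last 4 bits: the operand.
    return f"{OPCODES[mnemonic]:04b}{operand:04b}"


program = ["LOAD 7", "ADD 5", "STORE 9", "HALT"]
machine_code = [assemble(line) for line in program]
print(machine_code)
# ['00010111', '00100101', '00111001', '11110000']
```

Each readable instruction becomes a fixed pattern of 0s and 1s. A different processor would use a different table, which is why the same source code has to be translated separately for each kind of hardware.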

Human translators don't just translate words; they also understand many semantic nuances. Similarly, compilers can understand the structure and intent of a program, not just its individual words, allowing us to more easily write sophisticated programs. For example, if you're running a loop and you want to print something ten times, the compiler is not going to emit ten separate print instructions. Instead, it will tell the computer to execute the same instruction ten times, which is far more efficient.
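You can observe something similar in Python with the standard `dis` module. One caveat: Python compiles source code to bytecode for its own virtual machine rather than to native machine code, so this is only an analogy for what a compiler targeting real hardware does. The function name `greet_ten_times` is made up for this sketch.

```python
import dis


def greet_ten_times():
    for _ in range(10):
        print("hello")


# Collect the bytecode instructions Python generated for the function.
instructions = list(dis.get_instructions(greet_ten_times))

# Count how many instructions refer to the name "print".
print_loads = [ins for ins in instructions if ins.argval == "print"]
print(len(print_loads))

# Show the full listing; the exact instructions vary between Python versions.
dis.dis(greet_ten_times)
```

Even though the message is printed ten times when the function runs, the translated code refers to `print` only once, inside a loop that jumps back to repeat it.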

What comes after translation?

What we've learned about compilers applies to how programs are written and executed in almost all modern computers. But what comes next?

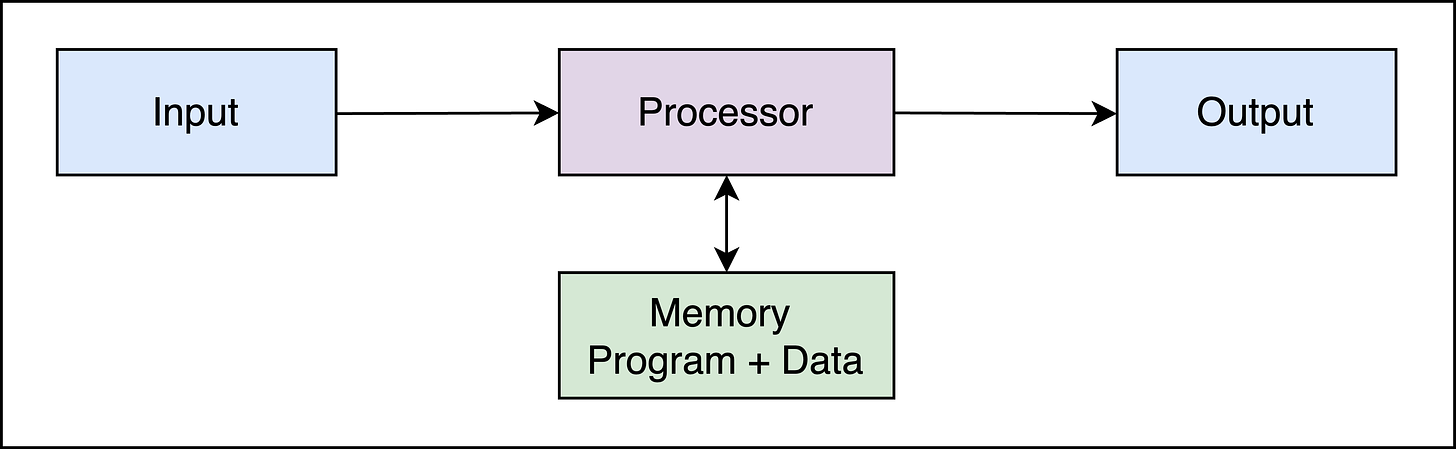

Before we get to that, let's look at the von Neumann architecture, which underlies most modern computers. It consists of these main components:

Processor: The core component responsible for executing logical instructions and performing arithmetic calculations.

Memory: Where the computer stores information and instructions, like a big filing cabinet.

Input devices: How we tell the computer what to do (e.g. keyboard or mouse).

Output devices: How the computer presents results after processing the data (e.g. screen or printer).

Each of these components is an essential player in the execution of a program. The processor, in particular, takes center stage once it receives the program's instructions, orchestrating the entire process from code to tangible results.

(Depicted above: von Neumann Architecture - The Stored Program Concept)

Once translated, programs can then be loaded into the computer memory. From there onwards, the processor executes them through what we call the read-execute-store cycle. In this process, each instruction is read, executed, and the results are stored back in memory for later use. This process continues until the last instruction is executed.
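The read-execute-store cycle can be sketched as a small simulation. The instruction names, the single `accumulator` register, and the memory layout below are assumptions chosen to keep the example short; a real processor works on binary instructions and has many more parts.

```python
def run(memory):
    """Run a toy program stored in memory until it reaches HALT."""
    accumulator = 0      # the processor's working value
    program_counter = 0  # address of the next instruction to read

    while True:
        # Read: get the next instruction from memory.
        operation, address = memory[program_counter]
        program_counter += 1

        # Execute: act on the instruction.
        if operation == "LOAD":
            accumulator = memory[address]
        elif operation == "ADD":
            accumulator += memory[address]
        elif operation == "STORE":
            # Store: write the result back into memory for later use.
            memory[address] = accumulator
        elif operation == "HALT":
            return memory
        else:
            raise ValueError(f"unknown operation: {operation}")


# Instructions and data share the same memory (the stored-program concept).
memory = [
    ("LOAD", 4),   # address 0: load the value at address 4
    ("ADD", 5),    # address 1: add the value at address 5
    ("STORE", 6),  # address 2: store the result at address 6
    ("HALT", 0),   # address 3: stop
    7,             # address 4: data
    5,             # address 5: data
    0,             # address 6: the result goes here
]

run(memory)
print(memory[6])  # 12
```

The loop keeps reading and executing one instruction at a time until it reaches the last one, which mirrors the cycle described above.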

A practical example

Let's imagine we want to build a program that can follow recipes to prepare meals in a simulated game environment.

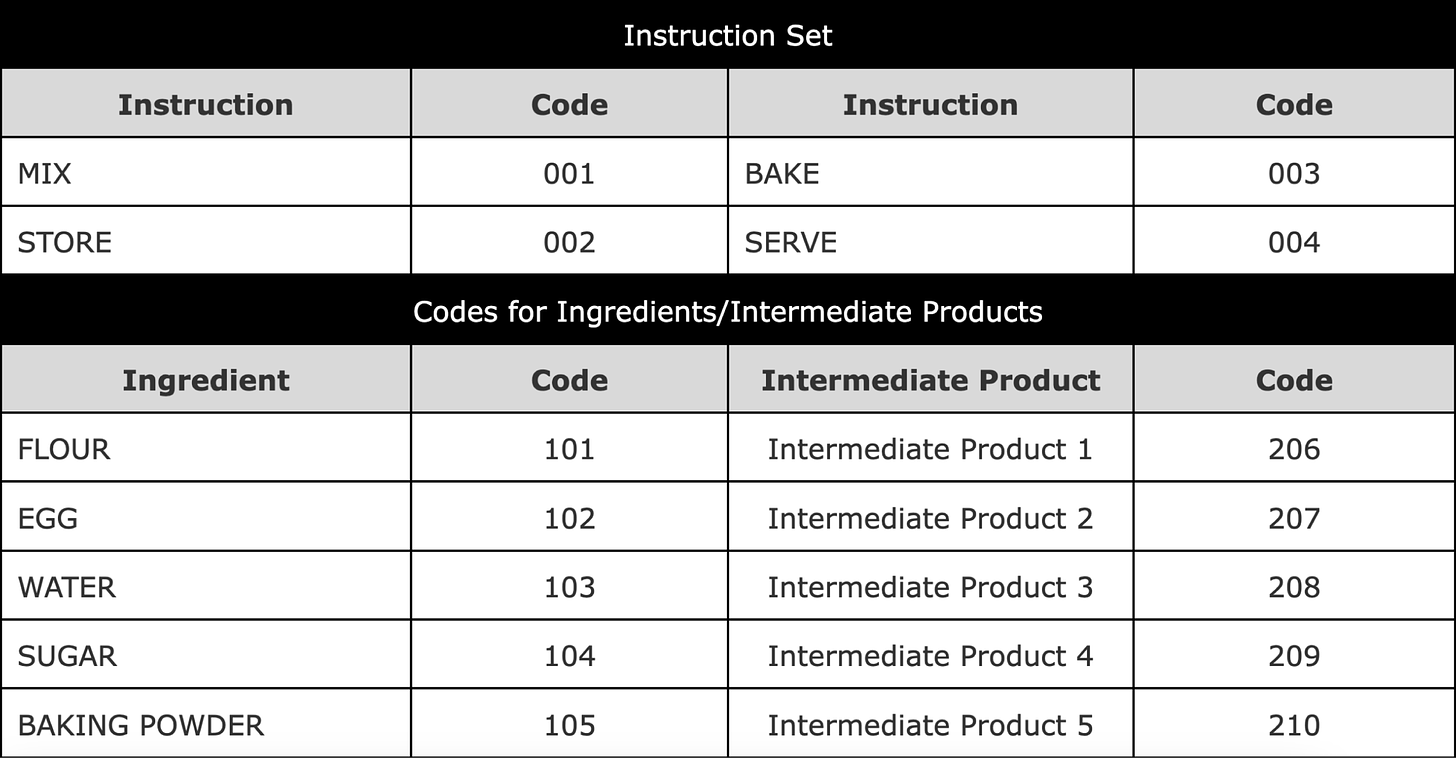

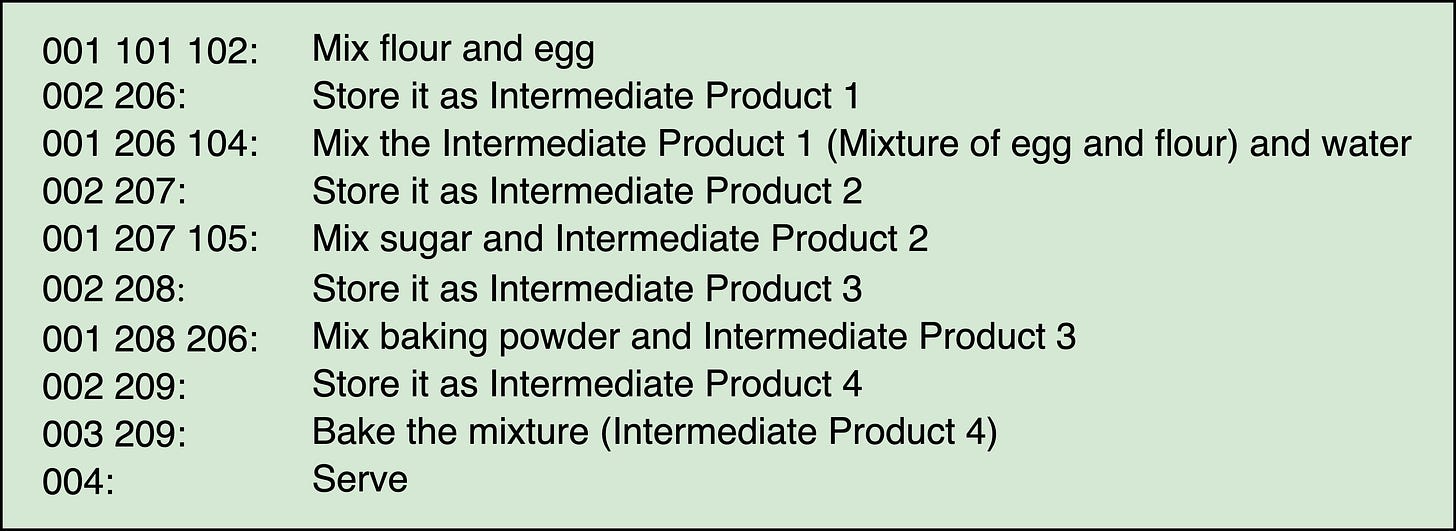

The following table shows a sample instruction set, or numeric codes for instructions and ingredients:

Note that different instructions require varying numbers of items to operate upon. For example, MIX requires two things to be mixed whereas BAKE requires only one item to be baked. Thus, the length of each statement corresponding to a recipe step will vary accordingly.

We can utilize this instruction set and ingredients to construct a recipe. A sample recipe for baking a cake follows:

But we wouldn't actually want to write our program this way; that's the compiler's job. To keep things user-friendly, the translator takes our instructions in a human-readable language and automatically converts them to machine code.
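Here is a rough sketch of what such a translator could look like for the recipe game. The numeric codes, the ingredient names, and the `translate` helper are invented for this example and may not match the values in the article's table; only the idea that MIX takes two items and BAKE takes one comes from the text above.

```python
# Hypothetical codes: instruction name -> (numeric code, number of items needed).
INSTRUCTIONS = {
    "MIX": (1, 2),
    "BAKE": (2, 1),
}

# Hypothetical numeric codes for ingredients.
INGREDIENTS = {
    "flour": 10,
    "eggs": 11,
    "batter": 12,
}


def translate(step: str) -> list[int]:
    """Convert a readable recipe step into a list of numeric codes."""
    name, *items = step.split()
    code, item_count = INSTRUCTIONS[name]
    if len(items) != item_count:
        raise ValueError(f"{name} needs {item_count} item(s), got {len(items)}")
    return [code] + [INGREDIENTS[item] for item in items]


recipe = ["MIX flour eggs", "BAKE batter"]
encoded = [translate(step) for step in recipe]
print(encoded)  # [[1, 10, 11], [2, 12]]
```

The MIX step becomes three numbers and the BAKE step becomes two, so the length of each encoded statement depends on how many items the instruction operates on.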

Conclusion

There is unimaginable complexity hidden behind programming. But thanks to advancements in technologies such as compilers, more of us can enjoy programming without having to dive into its technical depths.

We can be incredibly grateful to the generations before us that have worked to develop the thousands of high-level programming languages that allow us to work in human-readable code as opposed to machine language.

No matter where you are in your learning journey, understanding the "why" behind what we're doing is just as important as getting plenty of hands-on practice. As a reminder, you can find various beginner-friendly courses and projects to build a strong foundation in programming in our Learn to Code resources.

Happy learning!

– Fahim